Responsible AI

Responsible AI refers to the practices and principles that help ensure AI systems are transparent and trustworthy while mitigating potential risks and negative outcomes.

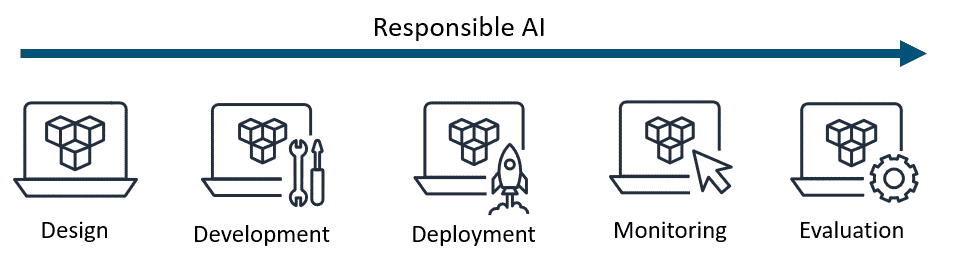

These standards should be considered throughout the entire lifecycle of an AI application: the initial design, development, deployment, monitoring, and evaluation phases.

Source: AWS Skill Builder

Responsible AI is not exclusive to any one form of AI. It should be considered whether you are building traditional or generative AI systems.

Traditional AI

Traditional machine learning models perform tasks based on the data you provide. They can make predictions for tasks such as ranking, sentiment analysis, and image classification.

However, each model can perform only one task, and to do it well, the model must be carefully trained on data. During training, the model analyzes the data and looks for patterns. It then makes predictions based on those patterns.
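
To make the "train on data, find patterns, predict" loop concrete, here is a toy single-task sentiment classifier. It is a minimal sketch, not how production models work: it simply counts which words appear with each label in the training data and predicts the label whose words best match the input. The function names `train` and `predict` and the sample sentences are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train(examples):
    """Learn per-label word counts from (text, label) pairs (the 'patterns')."""
    counts = defaultdict(Counter)
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts


def predict(counts, text):
    """Score each label by how often its training texts used the input's words."""
    words = text.lower().split()
    scores = {label: sum(c[w] for w in words) for label, c in counts.items()}
    # Pick the highest-scoring label (ties go to the label seen first in training).
    return max(scores, key=scores.get)


# Hypothetical training data for one task only: sentiment analysis.
training_data = [
    ("i love this phone", "positive"),
    ("great battery and great screen", "positive"),
    ("i hate this phone", "negative"),
    ("terrible battery and awful screen", "negative"),
]

model = train(training_data)
print(predict(model, "great screen"))   # positive
print(predict(model, "awful battery"))  # negative
```

The model can only answer the one question it was trained for (positive or negative); it cannot rank items or classify images without a separate model trained on different data.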

Some examples of traditional AI include recommendation engines, gaming, and voice assistants.

Generative AI

Generative artificial intelligence (generative AI) runs on foundation models (FMs). These models are pretrained on massive amounts of general-domain data, far beyond the data you would provide yourself.

Unlike traditional models, FMs can perform multiple tasks. Based on user input, usually text called a prompt, the model generates new content. It does this by drawing on the patterns and relationships it learned during pretraining, which let it predict the desired outcome.
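
Text-generating models are commonly described as repeatedly predicting the next piece of text from learned patterns. The toy below illustrates only that idea with a simple bigram (word-pair) counter; real foundation models use large neural networks trained on far more data, so treat this as a hypothetical sketch rather than how an FM is implemented. The corpus and the names `learn_next_word_counts` and `generate` are made up for illustration.

```python
from collections import Counter, defaultdict


def learn_next_word_counts(corpus):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def generate(follows, prompt, max_new_words=5):
    """Extend the prompt by repeatedly predicting the most frequent next word."""
    words = prompt.lower().split()
    if not words:  # nothing to continue from
        return ""
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:  # no learned continuation: stop early
            break
        # most_common breaks ties in favor of the word encountered first.
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


corpus = [
    "the cat sat on the mat",
    "the cat chased the dog",
    "the dog sat on the rug",
]

follows = learn_next_word_counts(corpus)
print(generate(follows, "the", max_new_words=4))  # the cat sat on the
```

The prompt ("the") steers what gets generated, and the output is new text assembled from patterns in the training data rather than a single fixed label.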

Some examples of generative AI include chatbots, code generation, and text and image generation.

Generative AI offers business value

- Creativity: Create new content and ideas, including conversations, stories, images, videos, and music.

- Productivity: Significantly improve productivity across lines of business, use cases, and industries.

- Connectivity: Connect and engage with customers and across organizations in new ways.