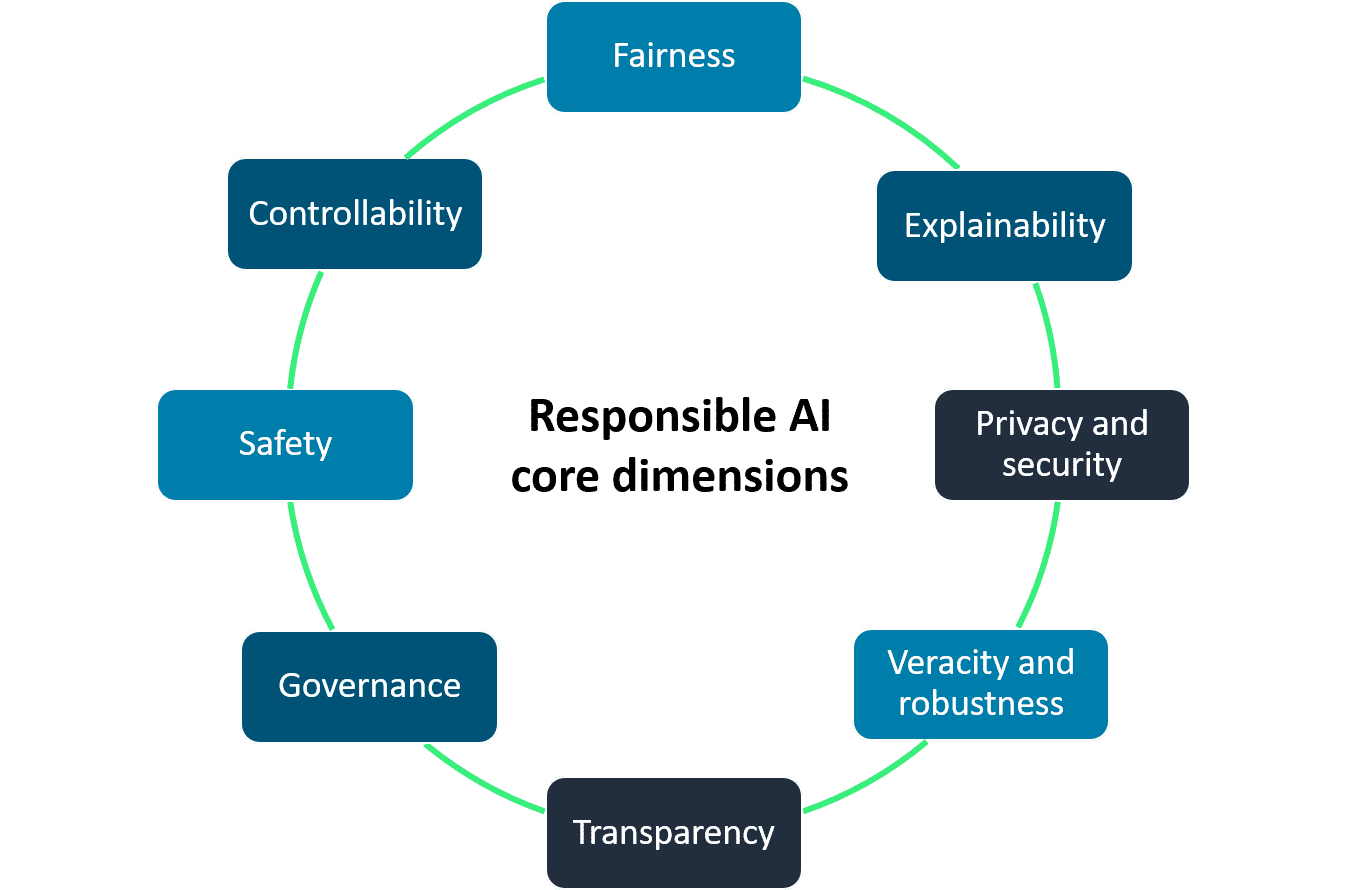

Core Dimensions of Responsible AI

Source: AWS Skill Builder

- Fairness

AI systems promote inclusion, prevent discrimination, uphold responsible values and legal norms, and build trust with society.

The goal is to create systems that are suitable and beneficial for everyone.

- Explainability

An AI system can clearly explain or justify its internal mechanisms and decisions in a way that humans can understand.

Humans must understand how models make decisions so that they can address any issues of bias, trust, or fairness.

- Privacy and security

Data is protected from theft and exposure.

At the privacy level, individuals control whether and when their data can be used.

At the security level, the system ensures that no unauthorized systems or users can access an individual's data.

- Transparency

Transparency communicates information about an AI system so stakeholders can make informed choices about their use of the system. Some of this information includes development processes, system capabilities, and limitations.

It gives individuals, organizations, and stakeholders the information they need to assess the fairness, robustness, and explainability of AI systems. With that information, they can identify and mitigate potential biases, reinforce responsible standards, and foster trust in the technology.

- Veracity and robustness

The AI system operates reliably, even in the face of unexpected situations, uncertainty, and errors.

It is resilient to changes in input parameters, data distributions, and external circumstances.

It retains reliability, accuracy, and safety in uncertain environments.

- Governance

Governance is a set of processes that are used to define, implement, and enforce responsible AI practices within an organization.

Governance addresses the ethical, legal, and societal problems that generative AI might introduce.

For example, governance policies can help protect individuals' intellectual property rights.

- Safety

Safety means developing algorithms, models, and systems in a way that is responsible, safe, and beneficial for individuals and society as a whole.

AI systems are designed and tested to avoid causing unintended harm to humans or the environment. Risks such as bias, misuse, and uncontrolled impacts must be considered proactively.

- Controllability

Controllability is the ability to monitor and guide an AI system's behavior so that it aligns with human values and intent. It involves developing controllable architectures so that any unintended issues can be managed and addressed.

It helps mitigate risks, promote fairness and transparency, and ensure that AI systems benefit society as a whole.

Business Benefits of Responsible AI

- Increased trust and reputation

Customers are more likely to interact with AI applications if they believe the system is fair and safe. This enhances the company's reputation and brand value.

- Regulatory compliance

As AI regulations emerge, companies with robust ethical AI frameworks are better positioned to comply with guidelines on data privacy, fairness, accountability, and transparency.

- Mitigating risks

Responsible AI practices help mitigate risks such as bias, privacy violations, security breaches, and unintended negative impacts on society. This reduces legal liabilities and financial costs.

- Competitive advantage

Companies that prioritize responsible AI can differentiate themselves from competitors and gain a competitive edge, especially as consumer awareness of AI ethics grows.

- Improved decision-making

AI systems built with fairness, accountability, and transparency in mind are more reliable and less likely to produce biased or flawed outputs, which leads to better data-driven decisions.

- Improved products and business

Responsible AI encourages a diverse and inclusive approach to AI development. Because it draws on varied perspectives and experiences, it can drive more creative and innovative solutions.